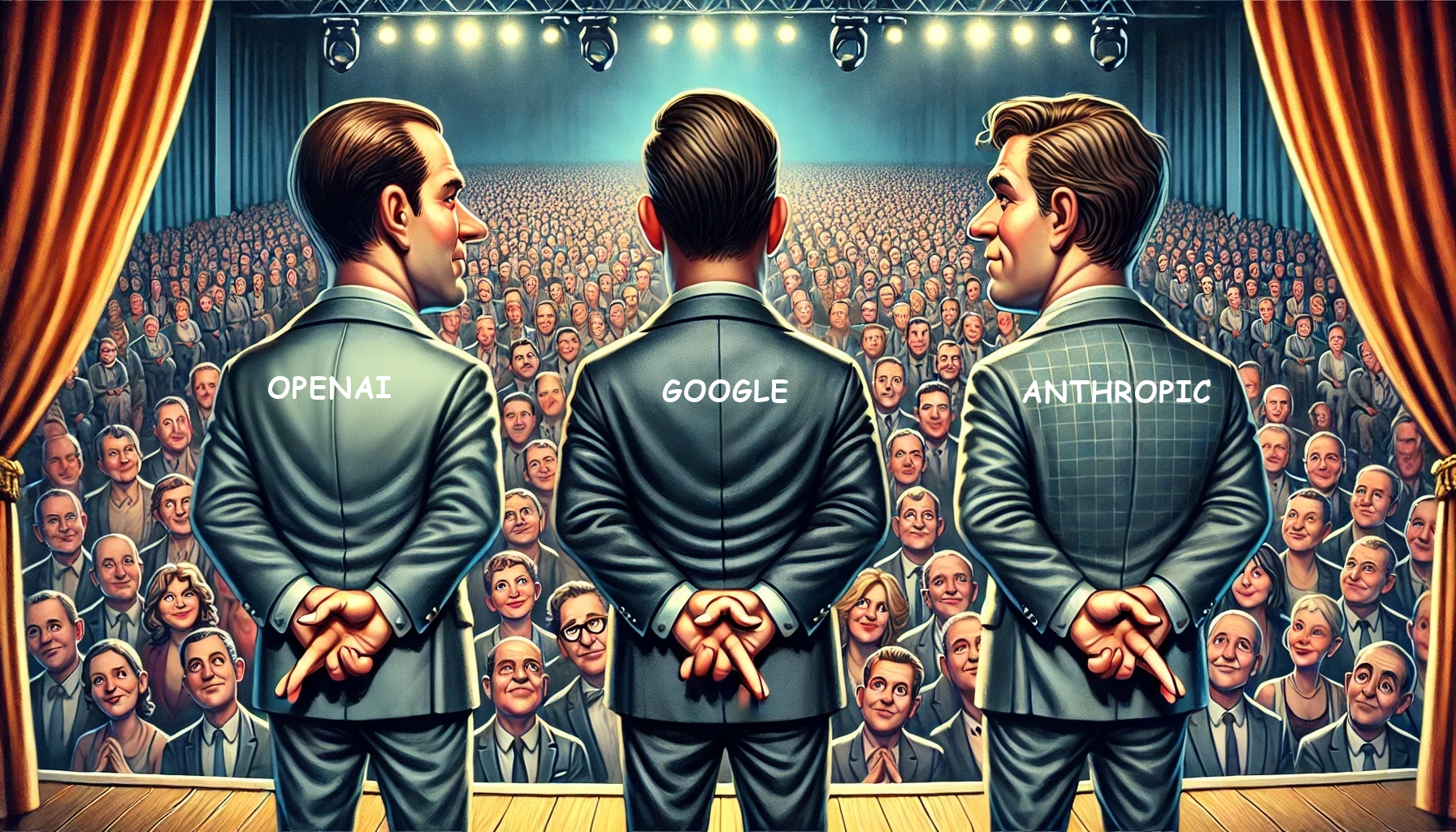

Liar Liar...

The broken promises by OpenAI, Google, and Anthropic highlight the challenges and complexities of developing and deploying AI technologies responsibly

The Biggest Broken Promises by the Big 3 AI Companies: OpenAI, Google, and Anthropic

In recent years, rapid advances in artificial intelligence (AI) have been accompanied by grand promises from leading AI companies. OpenAI, Google, and Anthropic have each pledged to revolutionize technology and society with their AI innovations. However, these promises have often fallen short, drawing significant scrutiny and criticism. This article explores some of the most notable broken promises by these AI giants.

OpenAI: Transparency and Ethical Concerns

OpenAI, once lauded for its commitment to transparency and ethical AI development, has faced numerous controversies that have tarnished its reputation.

Misleading Openness: Despite its name, OpenAI has been criticized for not being as open as it claimed. As early as 2016, the organization was aware that its moniker was misleading, yet it failed to correct this perception. This lack of transparency has raised questions about the company's true intentions and practices. Sam Altman, CEO of OpenAI, has acknowledged the need for greater transparency, stating, "We need to be more transparent about the capabilities and limitations of our models to manage expectations better" (Altman).

Copyright Violations: OpenAI has been accused of using vast amounts of copyrighted material without consent or compensation. This allegedly includes downloading YouTube videos and other content without permission, which has led to numerous lawsuits and accusations of intellectual property theft. Monika Bauerlein, CEO of the Center for Investigative Reporting, criticized this practice, saying, "This free rider behavior is not only unfair, it is a violation of copyright" (Bauerlein).

AI Safety Neglect: OpenAI pledged to dedicate 20% of its computing power to AI safety research but has reportedly failed to deliver on this promise. A recent report highlighted the departure of key safety researchers who left over concerns about the company's commitment to safety. Max Tegmark, MIT professor and co-founder of the Future of Life Institute, emphasized, "Ensuring AI safety is paramount, and companies must allocate sufficient resources to address this critical issue" (Tegmark).

Governance Issues: OpenAI's promises to give outsiders a significant role in its governance have not been kept. There have also been concerns about potential conflicts of interest involving CEO Sam Altman, who has not been fully transparent about his personal financial interests connected to the company. Together, these issues have raised questions about OpenAI's governance practices and its commitment to ethical standards.

Google: Rushed AI Implementations and Misinformation

Google's push into generative AI, particularly its AI-powered search feature, has been marked by a series of missteps and broken promises.

Rushed AI Upgrades: Google's generative AI upgrade to its search engine was part of a broader industry trend inspired by OpenAI's ChatGPT. However, experts believe that Google rushed this upgrade, leading to numerous errors and odd behaviors in search results. This has raised concerns about the company's commitment to quality and accuracy. Prabhakar Raghavan, Google's SVP of Knowledge & Information, noted, "While we strive to innovate rapidly, we must also ensure that our implementations are robust and reliable" (Raghavan).

Inaccurate Summaries: Google's AI-generated summaries have been criticized for drawing on poor sources or defunct websites, producing less useful and sometimes misleading information. Despite extensive testing, the AI has given erroneous advice, such as suggesting that people eat rocks or add glue to pizza, highlighting how difficult large language models (LLMs) are to control. Richard Socher, a prominent researcher in natural language processing, remarked, "You can create a quick prototype with an LLM, but to ensure it doesn't suggest eating rocks requires significant effort" (Socher).

Election Misinformation: Google, along with other AI companies, pledged to combat the deceptive use of AI during the 2024 election season. However, investigations have found that Google's AI models routinely gave inaccurate and harmful answers to election-related questions, falling short of the company's promise to provide accurate information. Emily M. Bender, a linguistics professor at the University of Washington, warned, "The problem with this kind of misinformation is that we're already swimming in it" (Bender).

Anthropic: Election Safeguards and Accuracy

Anthropic, a newer player in the AI field, has also faced criticism for not keeping its promises, particularly regarding election-related information.

Election Safeguards: Anthropic announced plans to direct users with questions about voting to vetted sources such as TurboVote.org. However, when tested, its Claude chatbot failed to apply these safeguards and provided inaccurate information instead. This gap between the company's public promises and its actual execution has raised questions about its commitment to accuracy. Josh Lawson, former chief legal counsel for the North Carolina State Board of Elections, stated, "A best practice is to redirect to authoritative information. And failure to do that could be problematic" (Lawson).

Delayed Implementations: Anthropic cited "a couple of bugs" as the reason for the delay in rolling out its election prompt. The delay has further eroded trust in the company's ability to deliver on its promises, especially in critical areas like elections, where accurate information is paramount. Sally Aldous, a spokesperson for Anthropic, acknowledged the issue, saying, "We are working diligently to address these bugs and ensure our election safeguards are fully implemented" (Aldous).

Conclusion

The broken promises by OpenAI, Google, and Anthropic highlight the challenges and complexities of developing and deploying AI technologies responsibly. While these companies have made significant strides in AI innovation, their failures in transparency, accuracy, and ethics underscore the need for greater accountability and oversight in the AI industry. As AI continues to evolve, these companies must not only set high standards but also meet them, ensuring that their technologies truly benefit society.